Game Arena is a public "testing ring" for top AI models, created as a joint project by Kaggle (Google's data science platform) and Google DeepMind. Its goal is to compare models in games where strategy, adaptation, and handling uncertainty are key. Poker fits perfectly as a textbook example of imperfect information (you can't see your opponents' cards), variance, and risk management, all of which test language models differently than chess does. In practice, the models play a very large number of hands in an environment that describes each situation to them in text.

In the poker section of Game Arena, models played heads-up No-Limit Hold'em (NLHE), and GPT-5.2 was the most profitable participant. Reports cite specific figures: approximately 180,000 hands played at $1/$2 blinds, for a profit of $167,614. Essentially, this is a "simulated cash game" benchmark that shows how a model performs with incomplete information. That is precisely the environment where being good at calculating combinations is not enough; a player also has to read ranges correctly, choose the right frequencies, and time its aggression well.
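To put the reported figures on a common scale, poker players usually express results as a win rate in big blinds per 100 hands (bb/100). The sketch below is an illustration only: the bb/100 conversion is a standard poker metric rather than something the reports state, the function name `bb_per_100` is hypothetical, and because the hand count is approximate, the result is a rough estimate.

```python
def bb_per_100(profit: float, big_blind: float, hands: int) -> float:
    """Win rate in big blinds per 100 hands (a common poker metric)."""
    # Convert profit into big blinds, then scale to a 100-hand sample.
    return profit / big_blind / hands * 100


# Figures as reported; the hand count ("approximately 180,000") is rounded.
profit_usd = 167_614
big_blind_usd = 2  # $1/$2 blinds, so the big blind is $2
hands_played = 180_000

rate = bb_per_100(profit_usd, big_blind_usd, hands_played)
print(f"{rate:.1f} bb/100")  # → 46.6 bb/100
```

Measuring in big blinds rather than dollars makes the number independent of the stakes, so it can be compared with results from other games or blind levels.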

Exhibition vs. Final Leaderboard

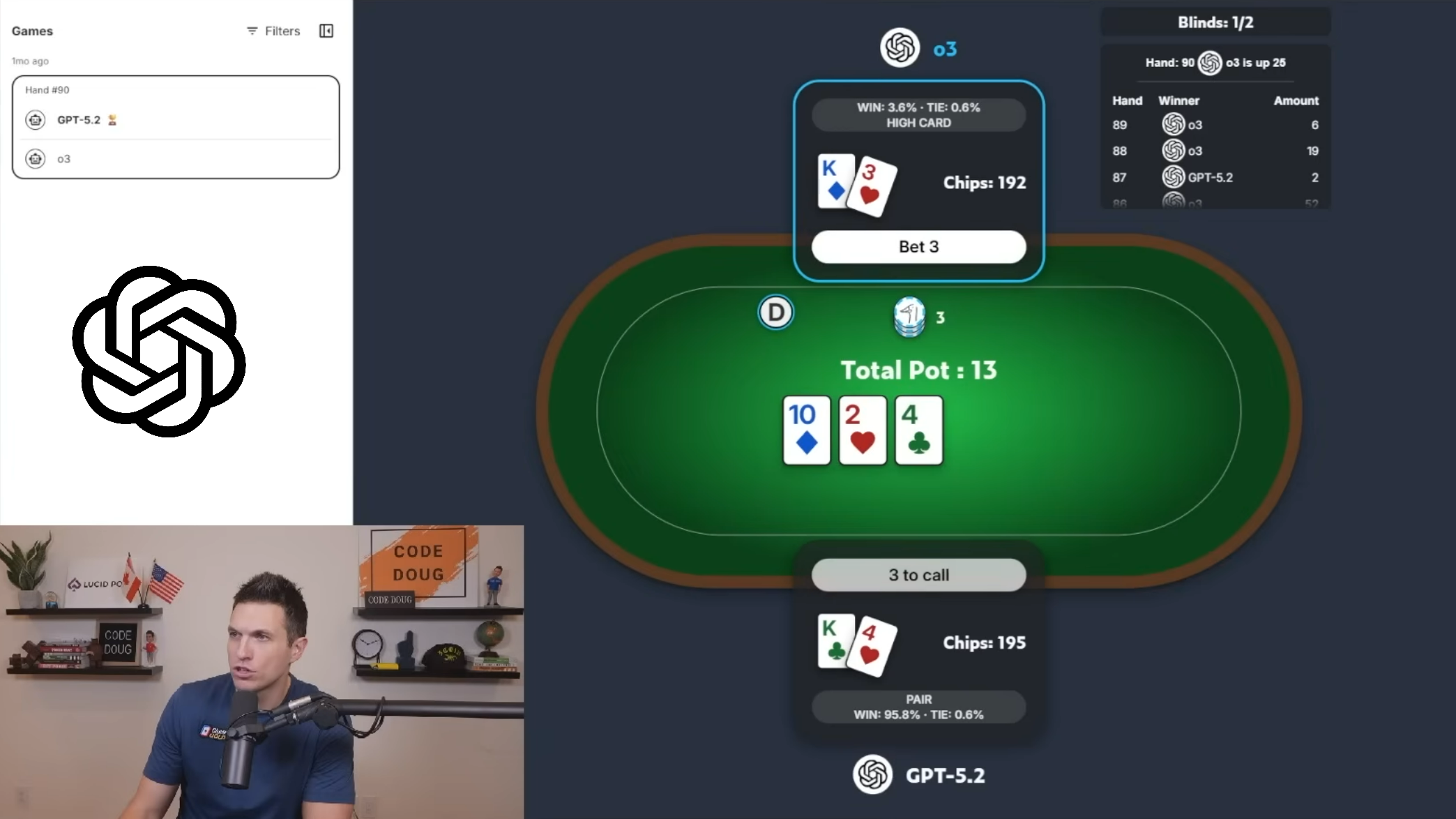

Another detail circulated on social media: in the livestreamed exhibition bracket, the model o3 beat GPT-5.2 in the final. This needs some explanation. The bracket was an audience-friendly format, a three-day show with selected matches and commentary. The final leaderboard, by contrast, was based on a much larger number of games and served as a more robust comparison of performance. In other words, o3 may have won the "televised" final, but over the long-term evaluation, GPT-5.2 emerged as the best poker model.

Game Arena drew attention not only for its numbers but also for its style of play. Several reactions from the poker community pointed to the models' markedly aggressive approach, which at times seemed "inhumanly" consistent. This is why it mattered to have well-known names such as Doug Polk, Nick Schulman, Liv Boeree, and Hikaru Nakamura commentating on the broadcast. Their commentary highlighted an interesting point: the models can indeed pressure opponents and force uncomfortable decisions, but they sometimes also reveal peculiar "logical holes" in how they reason through a situation. For fans, it was entertaining. For online poker, it is a reminder that game integrity and the problem of bots will only become more pressing.

What Does This Mean for Poker?

This isn't the story of "AI definitively solving poker." It is rather a signal that general-purpose models can already perform, in controlled benchmarks, at a level that commands respect and raises questions. If poker is to defend itself against unfair advantages in the future, open tests and public comparisons like this one can show where models are strong, where they falter, and what it actually means to "play well" in an era when bluffing is no longer just a human skill.

Sources: YouTube, PokerStrategy, GitHub, Blog.Google, Science.org